How to Reconstruct an Accident Using Video Footage

Knott Laboratory analyzes accidents captured on video using matchmoving or "camera tracking".

Published February 6, 2018

By Angelos G. Leiloglou, M. Arch.

Cameras surround us in our everyday lives. Businesses have surveillance cameras, police have body and dash cameras, buses and various commercial vehicles have dash cameras, and seemingly everyone has a smartphone camera. With cameras this widespread, one of the first steps after an accident or catastrophic event is to secure any video footage that may have captured it. Such accidents can include, but are not limited to:

- Falling objects resulting in injury

- Pedestrian/bicycle accidents

- Police shootings

- Slip/Trip-and-falls

- Vehicle accidents

The most common question about video footage is “Can we reconstruct the accident using the video footage?” The answer is often yes, even with limiting factors such as low-resolution video or an accident that occurs near the edge of the camera frame.

When it receives video footage, Knott Laboratory applies the scientifically validated method of matchmoving, or “camera tracking.” Matchmoving is a technique based upon photogrammetry, which is the science of obtaining measurements from photographs or images. Accordingly, matchmoving is simply the application of photogrammetry to a sequence of individual images (i.e., video frames).

The matchmoving process analyzes the 2D information (x, y) from a video and converts it into 3D information (x, y, z) about the camera and scene. When done correctly, this technique allows computer-generated 3D virtual objects to be accurately composited into the video footage with correct position, scale, and orientation. The process generally involves the following six steps:

Lens Distortion Correction:

Before the matchmoving process can be performed, lens distortion must be addressed. Lens distortion makes straight lines appear curved, most visibly near the edges of an image. The image can be undistorted by calibrating the subject camera or an exemplar camera, by using the matchmoving software, or by manual correction.
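To illustrate what undistorting involves, here is a minimal sketch that assumes a single-coefficient radial distortion model. The coefficient `k1`, the function names, and the sample point are hypothetical, and the source does not state which distortion model or software Knott Laboratory uses. The sketch distorts a point in normalized image coordinates and then recovers the original by fixed-point iteration.

```python
def distort(xu, yu, k1):
    """Apply one-coefficient radial distortion to a normalized image point."""
    r2 = xu * xu + yu * yu          # squared distance from the image center
    factor = 1.0 + k1 * r2          # grows (or shrinks) toward the edges
    return xu * factor, yu * factor


def undistort(xd, yd, k1, iterations=20):
    """Invert the radial model by fixed-point iteration (assumes mild distortion)."""
    xu, yu = xd, yd                 # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        factor = 1.0 + k1 * r2
        xu, yu = xd / factor, yd / factor
    return xu, yu


# Hypothetical values: a point near the frame edge and a barrel-type coefficient.
k1 = -0.2
xd, yd = distort(0.5, 0.3, k1)
xu, yu = undistort(xd, yd, k1)
print(round(xu, 6), round(yu, 6))
```

The iteration only converges for mild distortion; strongly distorted wide-angle footage generally needs a fuller model with more coefficients.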

2D Tracking:

Once the lens distortion is corrected, the matchmoving process begins. The first step of the matchmoving process is to identify 2D points in the video image and follow those points throughout the image sequence using 2D trackers.
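As a rough picture of what a 2D tracker does, the sketch below finds a small image patch from one frame in the next frame by minimizing the sum of squared differences (SSD). This is a simplified stand-in, not the tracker used by any particular matchmoving package; the frames are tiny synthetic grayscale grids, and all names are hypothetical.

```python
def ssd(frame, template, top, left):
    """Sum of squared differences between the template and one frame patch."""
    total = 0
    for r, row in enumerate(template):
        for c, value in enumerate(row):
            diff = frame[top + r][left + c] - value
            total += diff * diff
    return total


def track_patch(frame, template):
    """Return the (row, col) of the frame patch that best matches the template."""
    th, tw = len(template), len(template[0])
    fh, fw = len(frame), len(frame[0])
    best_score, best_pos = None, None
    for top in range(fh - th + 1):
        for left in range(fw - tw + 1):
            score = ssd(frame, template, top, left)
            if best_score is None or score < best_score:
                best_score, best_pos = score, (top, left)
    return best_pos


def make_frame(height, width, patch, top, left):
    """Build a synthetic black frame containing one textured patch."""
    frame = [[0] * width for _ in range(height)]
    for r, row in enumerate(patch):
        for c, value in enumerate(row):
            frame[top + r][left + c] = value
    return frame


patch = [[9, 5], [3, 7]]
frame_a = make_frame(6, 8, patch, 1, 1)         # feature at row 1, col 1
frame_b = make_frame(6, 8, patch, 2, 3)         # same feature, moved
template = [row[1:3] for row in frame_a[1:3]]   # cut the feature out of frame A
print(track_patch(frame_b, template))           # (2, 3)
```

Repeating the search frame by frame yields the 2D path of each tracked point through the sequence.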

Application of Constraints:

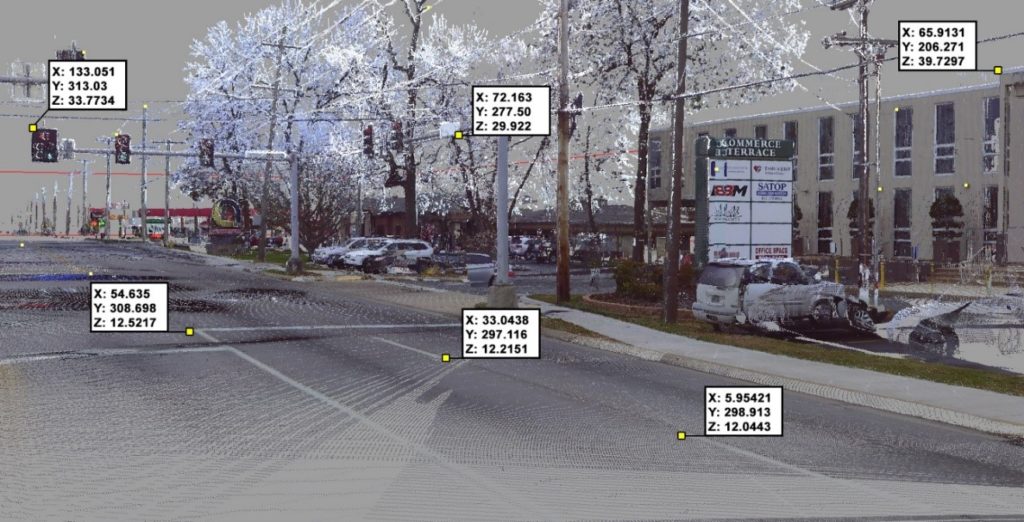

After the 2D tracking step has been completed, the software technically has enough information to attempt to solve for, or calibrate, the virtual camera that matches the real-world camera. However, to increase the likelihood of an accurate calibration, it is highly beneficial to apply constraints, which are known 3D global positions of points seen in the video image. High-definition scanning of a scene is a very powerful tool for identifying constraints.

3D Calibration:

After 2D tracking is complete and constraints are applied, the next step is 3D calibration. The goal of 3D calibration is to determine the exact camera movement and optical properties of the real-world camera that was used to record the video, and to reproduce a 3D virtual camera that “matches” it. This is performed by the matchmoving software.
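One way to think about what "matching" means here is reprojection error: project the known 3D points through a candidate virtual camera and measure how far they land from the tracked 2D points. The sketch below is a toy illustration under stated assumptions, not the solver used by matchmoving software. It assumes a simple pinhole camera with a known position and orientation, and searches only for the focal length. All coordinates and names are hypothetical.

```python
import math


def project(point, cam_pos, rotation, focal_px, center):
    """Project a 3D world point to pixel coordinates with a pinhole model."""
    d = [p - c for p, c in zip(point, cam_pos)]
    # Rotate the offset into camera coordinates (camera looks along +z).
    x, y, z = (sum(rotation[i][j] * d[j] for j in range(3)) for i in range(3))
    return (focal_px * x / z + center[0], focal_px * y / z + center[1])


def rms_reprojection_error(points_3d, points_2d, cam_pos, rotation, focal_px, center):
    """Root-mean-square pixel distance between projected and tracked points."""
    total = 0.0
    for p3, (u, v) in zip(points_3d, points_2d):
        pu, pv = project(p3, cam_pos, rotation, focal_px, center)
        total += (pu - u) ** 2 + (pv - v) ** 2
    return math.sqrt(total / len(points_3d))


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
cam_pos = (0.0, 0.0, 0.0)
center = (960.0, 540.0)

# Hypothetical constraints: known 3D positions of points visible in the video.
constraints = [(1.0, 0.5, 10.0), (-2.0, 1.0, 8.0), (0.5, -1.0, 12.0), (3.0, 2.0, 15.0)]
# Synthetic "tracked" 2D points, generated with a focal length of 1200 pixels.
tracked = [project(p, cam_pos, identity, 1200, center) for p in constraints]

# Toy calibration: pick the candidate focal length with the lowest error.
candidates = range(800, 1601, 50)
best_focal = min(
    candidates,
    key=lambda f: rms_reprojection_error(constraints, tracked, cam_pos, identity, f, center),
)
print(best_focal)  # 1200
```

A real calibration solves for camera position, orientation, and lens properties together, frame by frame, but the quantity being minimized is of this general kind.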

Object Tracking/Matching:

After calibrating the virtual camera in a virtual scene, the motion of objects depicted in the video such as vehicles, pedestrians, or other objects can be determined through the process of object tracking or object matching.

The process of object matching involves viewing the video footage through the virtual camera in the virtual scene and positioning a surrogate virtual model, with the same size and geometry as the real-world object, so that it matches the object’s position relative to the point cloud as depicted in each frame of the video.

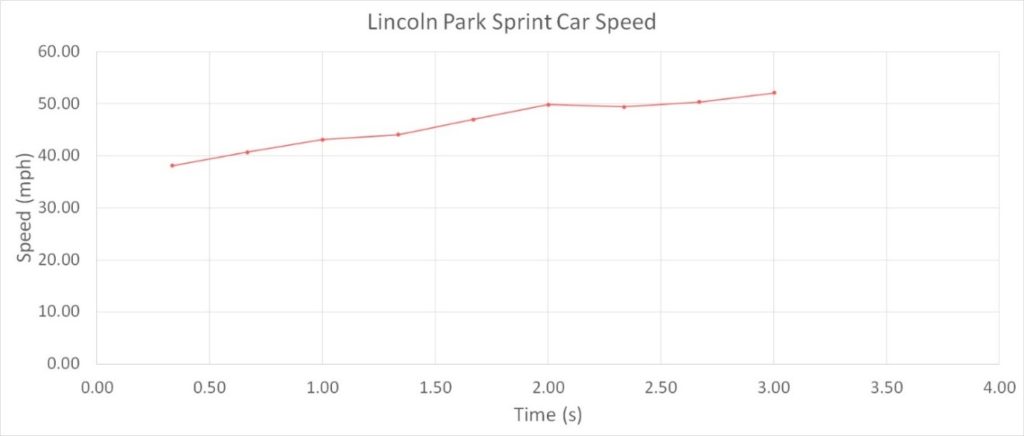

Analyzing Object Dynamics:

Once an object has been tracked/matched, its 3D translation (x, y, z) and orientation (roll, pitch, yaw) data for each frame are exported from the 3D animation program and imported into an Excel spreadsheet, where the object’s motion data (speed, acceleration, heading angle, etc.) are calculated and graphed. The object’s motion data are then evaluated to confirm that the motion is consistent with the laws of physics.
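The calculations in this step are finite differences over the per-frame positions. The sketch below shows the idea in Python rather than a spreadsheet. It assumes positions in meters, a constant frame rate, and a heading measured in the x-y plane. The sample data, frame rate, and function name are hypothetical.

```python
import math


def motion_from_positions(positions, fps):
    """Compute speed, heading, and acceleration from per-frame (x, y, z) positions."""
    dt = 1.0 / fps                       # time between consecutive frames, in seconds
    speeds, headings = [], []
    for (x0, y0, z0), (x1, y1, z1) in zip(positions, positions[1:]):
        dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
        speeds.append(math.sqrt(dx * dx + dy * dy + dz * dz) / dt)   # m/s
        headings.append(math.degrees(math.atan2(dy, dx)))            # degrees from +x
    # Acceleration is the change in speed between consecutive intervals.
    accels = [(v1 - v0) / dt for v0, v1 in zip(speeds, speeds[1:])]  # m/s^2
    return speeds, headings, accels


# Hypothetical track: an object moving along +x and speeding up, sampled at 10 fps.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.05, 0.0, 0.0), (3.15, 0.0, 0.0)]
speeds, headings, accels = motion_from_positions(positions, fps=10)
print([round(v, 2) for v in speeds])
print([round(a, 2) for a in accels])
```

Frame-to-frame differences amplify small positioning errors, so in practice the resulting curves are usually examined for noise before conclusions are drawn from them.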

Knott Laboratory has reconstructed numerous accidents using the scientifically validated method of matchmoving. If you want more information regarding matchmoving, or want to determine whether matchmoving can be applied to your video footage to reconstruct an accident, please contact Knott Laboratory at (303) 925-1900.